Geo4D: Leveraging Video Generators for Geometric 4D Scene Reconstruction

A method to repurpose video diffusion models for monocular 3D reconstruction of dynamic scenes.

A feed-forward approach to increase the likelihood that the 3D generator outputs stable 3D objects directly.

A model for completed 3D reconstruction from partially visible inputs.

An interactive video generative model that can serve as a motion prior for part-level dynamics.

Employing explicit correspondence matching as a geometry prior enables NeRF to generalize across scenes.

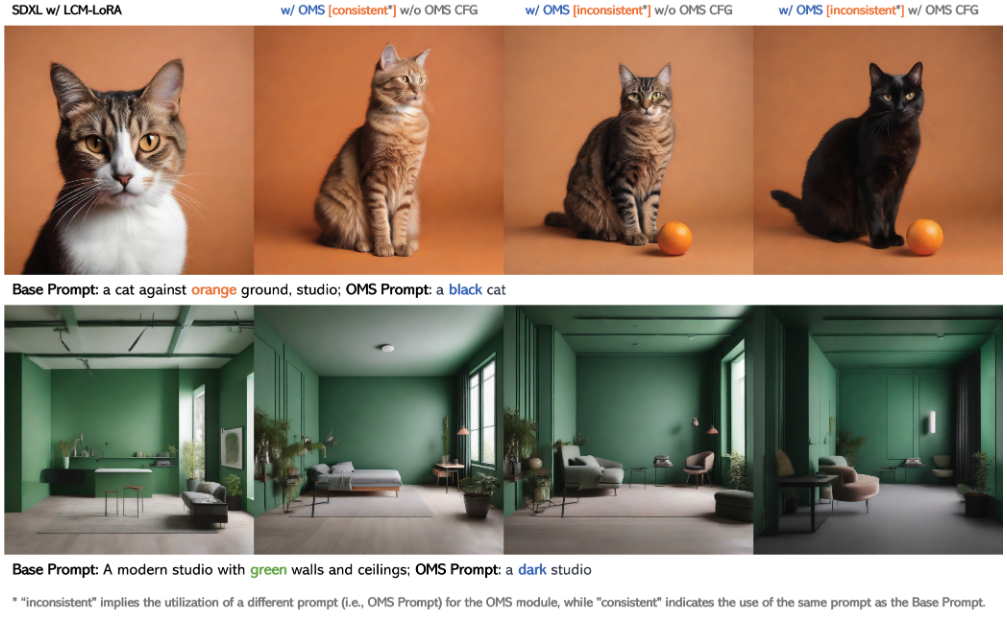

Semantic Style Transfer that simultaneously guides both style and appearance transfer.

A fast, highly efficient model, trainable on a single GPU in one day, for 3D scene reconstruction from a single image.

A unified framework to recover better 2D appearance and 2.5D geometry.

A feed-forward model that reconstructs 3D structure and appearance from uncalibrated images.

Probe large vision models to determine to what extent they 'understand' different physical properties in an image.

A feed-forward approach for 360-degree scene-level novel view synthesis using only sparse observations.

A feed-forward approach for efficiently predicting 3D Gaussians from sparse multi-view images in a single forward pass.

A model of physical interaction with objects in images through part-level dragging.

A self-organized 3D segmentation/decomposition model via neural implicit surface representation.

A method to synthesize consistent novel views from a single image on open-set categories without the need for explicit 3D representations.

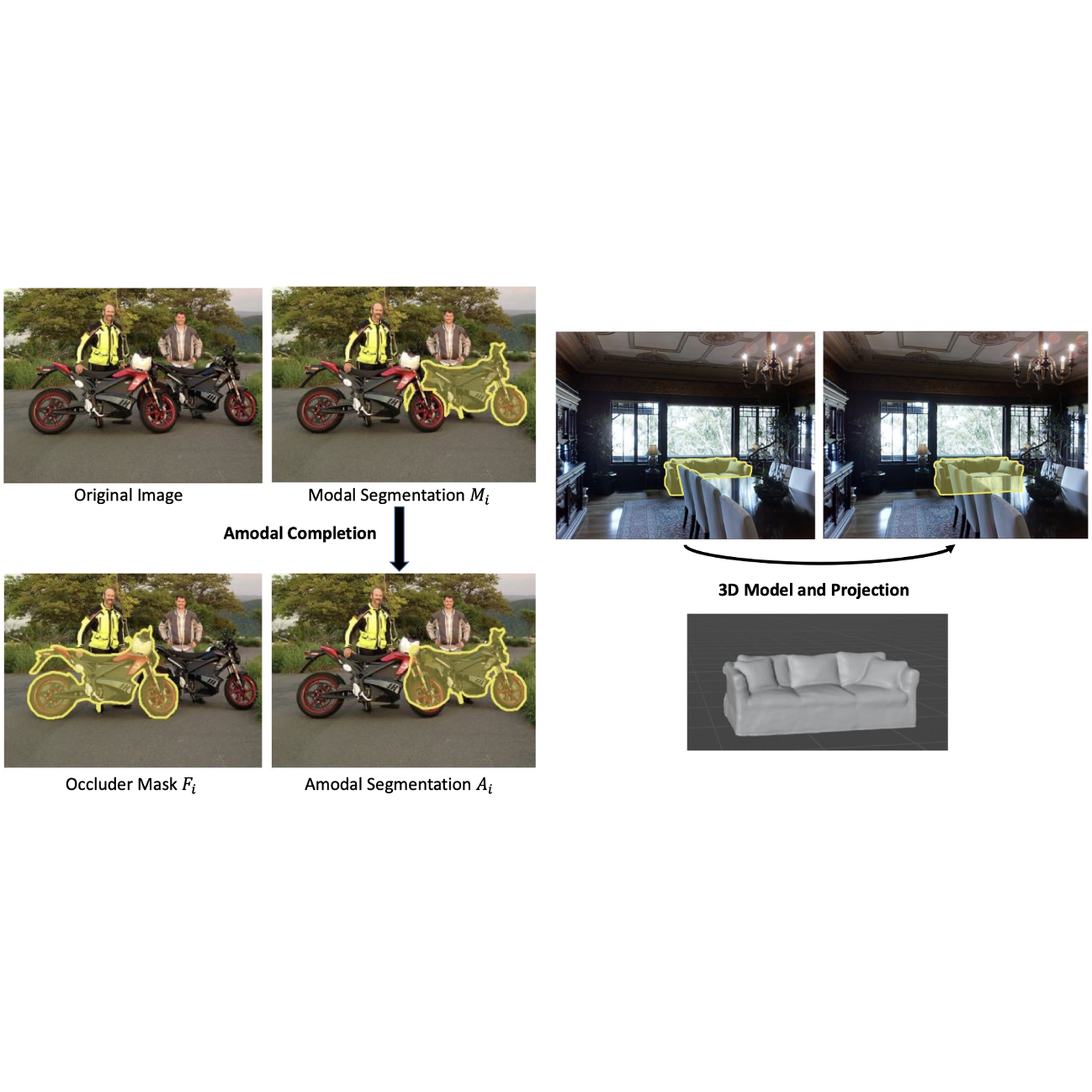

A Stable Diffusion-based network that solves the amodal completion problem for any category, without requiring an occluder mask.

A versatile plug-and-play module to fix the scheduler flaws for diffusion models.

A view-consistent indoor panorama outpainting model using latent diffusion models.

PICFormer achieves pluralistic image completion with multiple and diverse solutions using a transformer-based architecture.

A generalized framework for multi-modality control based on text-to-image generation.

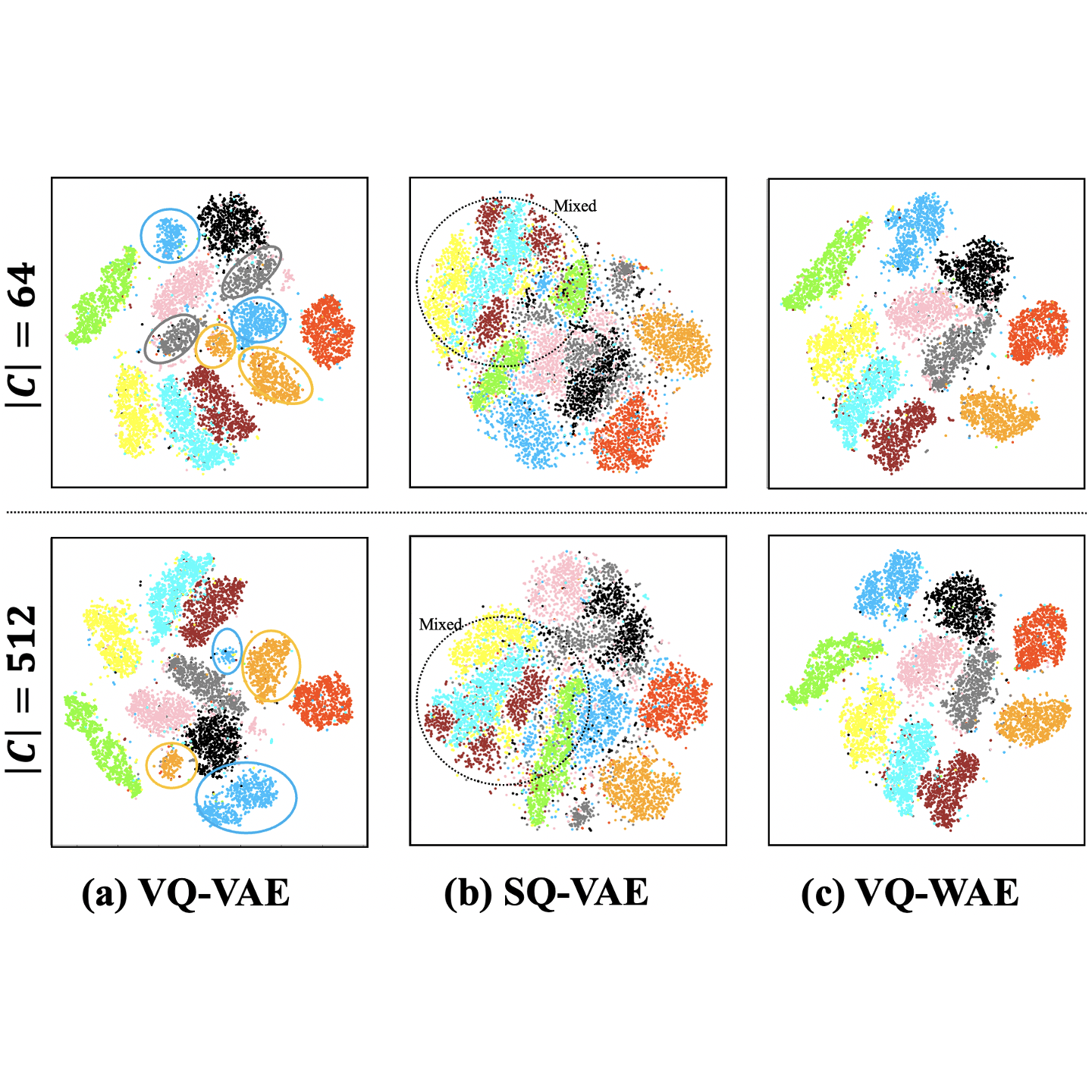

A simple approach to avoid codebook collapse and achieve 100% codebook utilisation.

Minimize the codebook-data distortion as the Wasserstein distance.

A unified discrete diffusion model for simultaneous vision-language generation.

A spatially conditional normalization is introduced to address the repeated artifacts in vector quantized methods.

Automatically decomposes a scene into 3D instances, trained using only 2D semantic labels and images.

A model that transfers the 2D semantic map into a 3D NeRF, and lets users edit the 3D model through 2D semantic input.

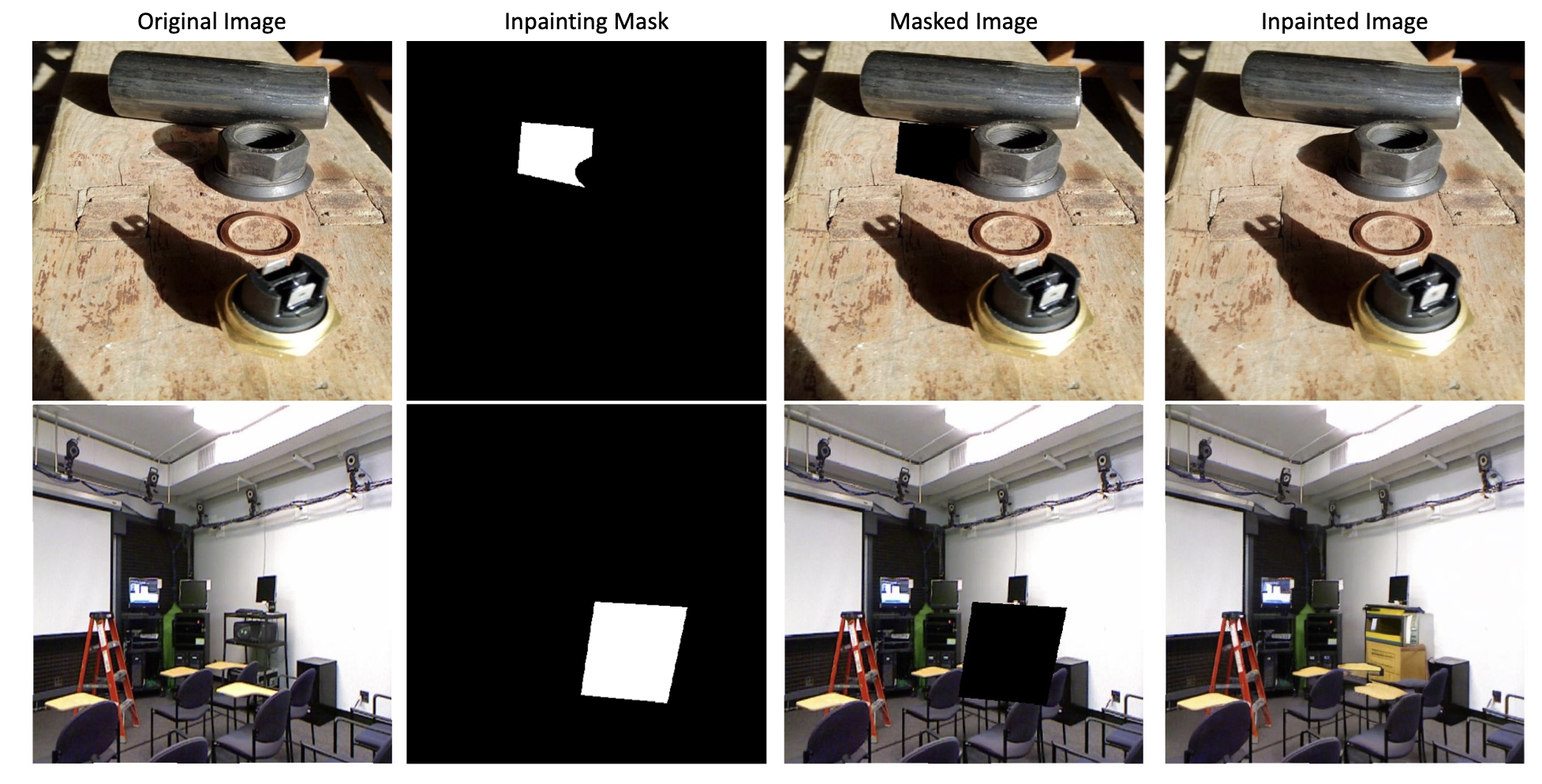

TFill fills in reasonable contents for both foreground object removal and content completion.

A high-level scene understanding system that simultaneously models the completed shape and appearance for all instances.

A GAN inversion model trained for stylizing portraits.

A novel spatially-correlative loss that is simple, efficient and yet effective for preserving scene structure consistency while supporting large appearance changes during unpaired I2I translation.

Given a masked image, the proposed PIC model is able to generate multiple and diverse plausible results.

Without using any real depth map, the proposed model evaluates depth maps on real scenes using only synthetic datasets.