NRF Fellowship, National Research Foundation

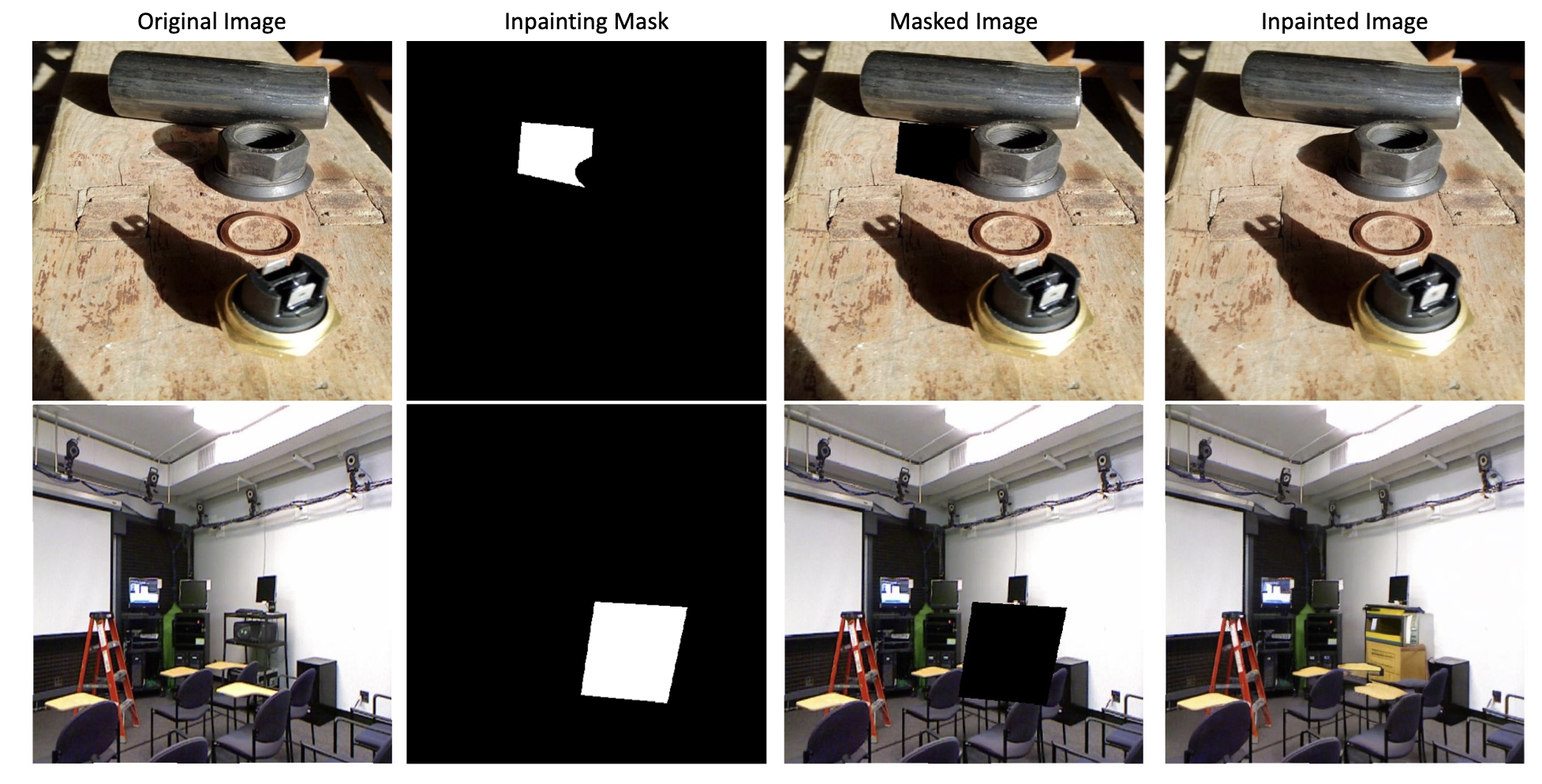

From Pixels to Physics: Integrating Physical Properties in Natural World Creation

National Research Foundation, PI

The grant was awarded on 12/02/2025 (S$ 3,078,720.00), and started on 01/09/2025 and will end on 30/08/2030.

The aim of From Pixels to Physics is to create a realistic natural world adhering to physical principles, rather than dealing with only the realistic pixels as in traditional image, video, and 3D synthesis. Physics-based natural world creation is challenging because it requires a holistic interpretation of scenes and objects within it, including but not limited to appearance, geometry, materials, occlusion, motion, gravity, interaction, mass and sound.